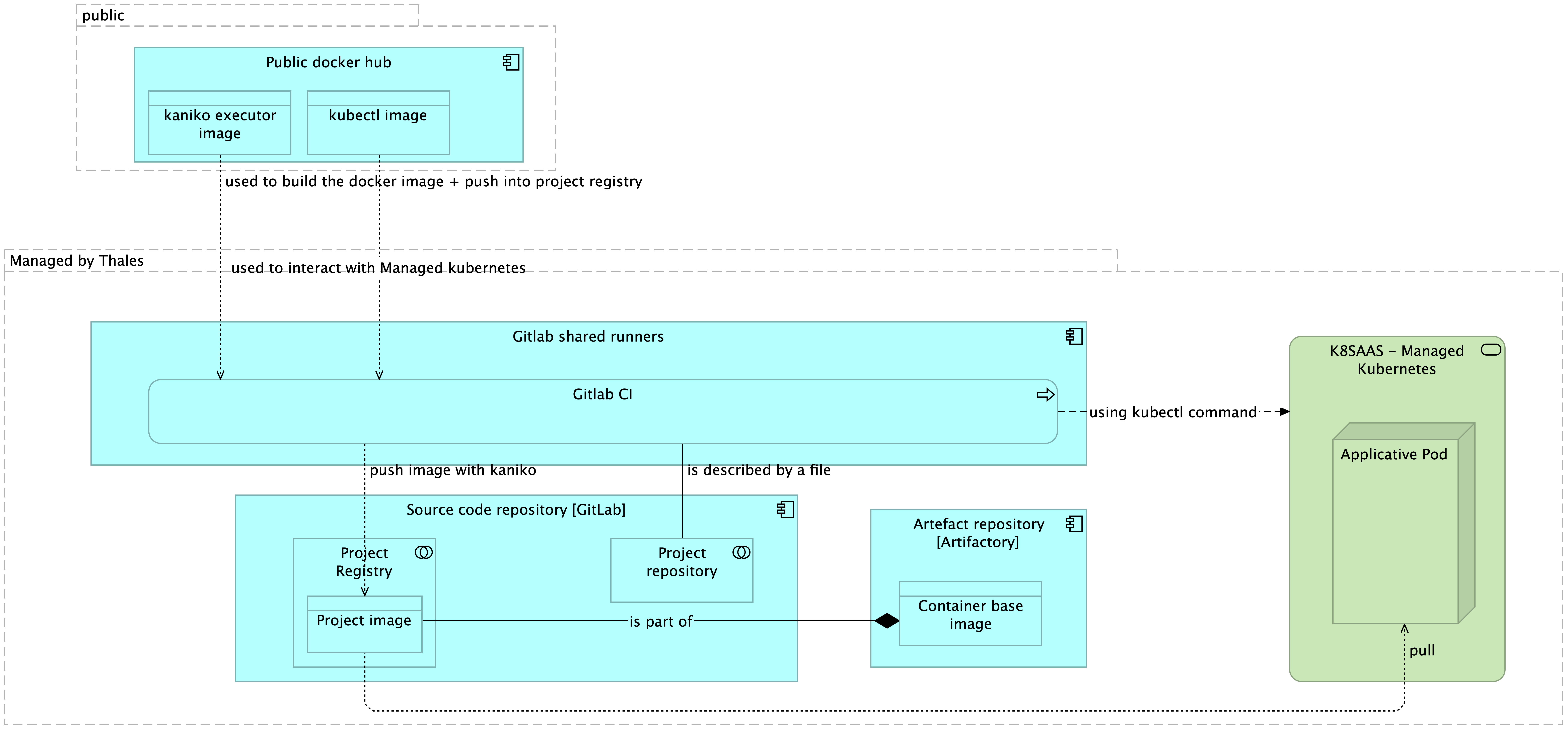

Use Thales Container Base Images

Introduction

Statistics from https://vulnerablecontainers.org show that more than 60% of the 1,000 most downloaded images on hub.docker.com are vulnerable.

The K8SaaS team recommends using Thales-approved container base images to run your applications.

This page points you to the right GitLab repositories and artifacts, and walks you step by step through a quick example.

Tutorial

In this tutorial, you're going to:

- Discover the Container Base Images Catalog approved by Thales

- Deploy a simple helloworld application based on a base image

Note:

- Only the application developed by Thales engineers uses the base image.

- The CI pipeline uses public images (a lower risk, since CI jobs are short-lived).

- Base images are stored in Artifactory (JFrog).

- The project image (built from the base image) is stored in the GitLab registry, because it is project-specific.

Container Base Images Catalog approved by Thales

Please look at this GitLab repository.

Deploy a simple helloworld application based on a base image

Prerequisites

- You need access to a K8SaaS cluster with at least developer-role rights.

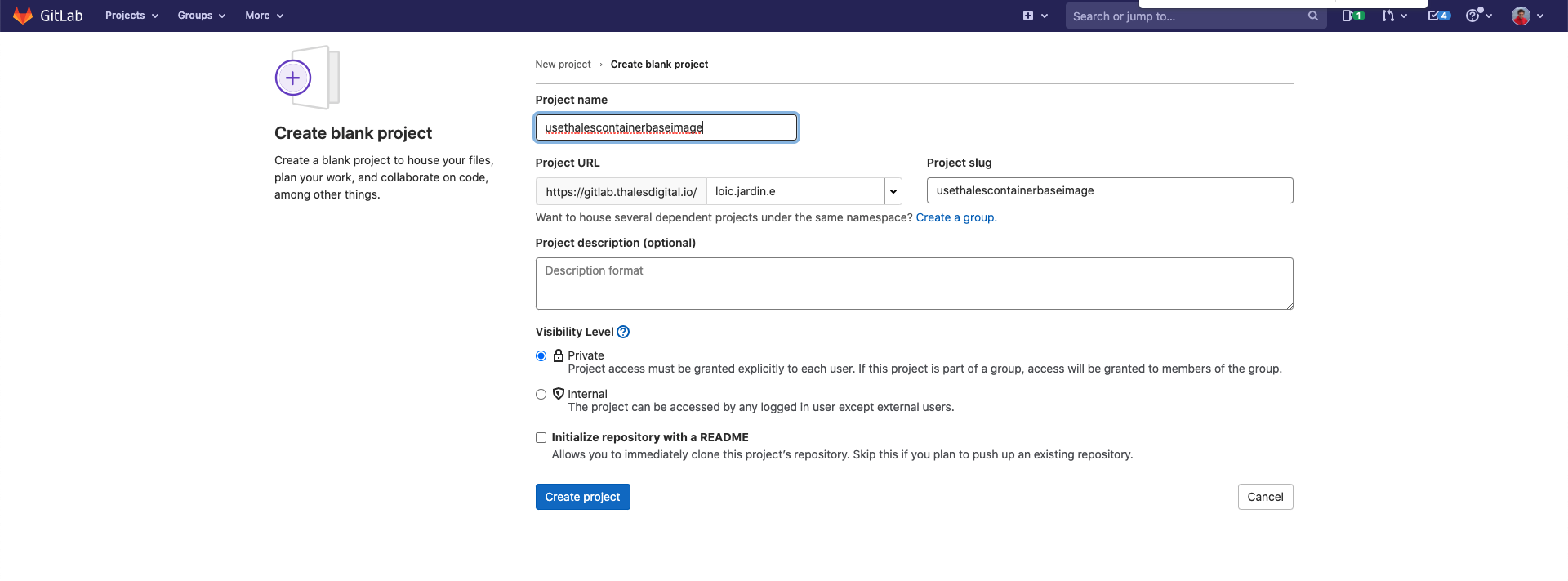

First, create a GitLab project.

Then clone it on your laptop:

# in my case (update the URL with your own repository):
$ git clone https://gitlab.thalesdigital.io/loic.jardin.e/usethalescontainerbaseimage.git

Create a Dockerfile

touch Dockerfile

Then fill in the Dockerfile, using the base image documentation and the following example:

FROM artifactory.thalesdigital.io/docker-internal/base-images/amd64/alpine:latest

# Installation of packages shall be done as root
USER root
RUN \
    apk add --update wget && \
    rm -rf /var/cache/apk/*

# Switch back to the non-root user bob as default
USER bob

CMD ["echo", "Welcome to the k8saas membership team!"]

Create a .gitlab-ci.yml file:

touch .gitlab-ci.yml

Fill it with:

stages:
  - build
  - deploy_application

variables:
  KUBECONFIG: ${CI_PROJECT_DIR}/kube_config
  DOCKER_IMAGE_TAG: ${CI_PIPELINE_ID}-${CI_COMMIT_SHA}

build:image:
  stage: build
  image:
    name: gcr.io/kaniko-project/executor:debug
    entrypoint: [""]
  retry: 2
  script:
    - mkdir -p /kaniko/.docker
    # quote the variable so the JSON is written unchanged
    - echo "$DOCKER_AUTH_CONFIG" > /kaniko/.docker/config.json
    - /kaniko/executor --context $CI_PROJECT_DIR --dockerfile $CI_PROJECT_DIR/Dockerfile --destination $CI_REGISTRY_IMAGE:$DOCKER_IMAGE_TAG

deploy:helloworld:
  stage: deploy_application
  dependencies:
    - build:image
  image:
    name: bitnami/kubectl:latest
    # bitnami/kubectl uses kubectl as its entrypoint; clear it so the job script runs in a shell
    entrypoint: [""]
  before_script:
    - echo "${KUBE_CONFIG_K8SAAS}" | base64 -d > ${KUBECONFIG}
    - export KUBECONFIG=${KUBECONFIG}
    - sed -i "s%TOBEREPLACE%$CI_REGISTRY_IMAGE:$DOCKER_IMAGE_TAG%g" kubernetes_deployment.yaml
  script:
    - kubectl apply -f kubernetes_deployment.yaml -n $NAMESPACE_K8SAAS
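The deploy:helloworld job builds the image reference from CI_REGISTRY_IMAGE, CI_PIPELINE_ID and CI_COMMIT_SHA, then substitutes it for TOBEREPLACE with sed. To preview what will be applied, you can reproduce both steps locally. This is a sketch: the function names image_ref and render_manifest are hypothetical, and unlike the job it prints the rendered manifest instead of editing the file in place.

```shell
#!/bin/sh
# Build the image reference the same way the pipeline does:
# $CI_REGISTRY_IMAGE:$CI_PIPELINE_ID-$CI_COMMIT_SHA
image_ref() {
  registry_image="$1"; pipeline_id="$2"; commit_sha="$3"
  printf '%s:%s-%s\n' "$registry_image" "$pipeline_id" "$commit_sha"
}

# Replace the TOBEREPLACE placeholder and print the result to stdout.
# '%' is the sed delimiter because the image reference contains '/';
# assumes the reference itself contains no '%' or '&'.
render_manifest() {
  manifest="$1"; ref="$2"
  sed "s%TOBEREPLACE%${ref}%g" "$manifest"
}
```

For instance, `image_ref registry.thalesdigital.io/loic.jardin.e/usethalescontainerbaseimage 945363 998a0c3acc7179ddc2d53732d2a668f8ccc4300b` gives the same reference that appears in the pod events shown later in this page; pass it to `render_manifest kubernetes_deployment.yaml "$ref"` to see the final manifest.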

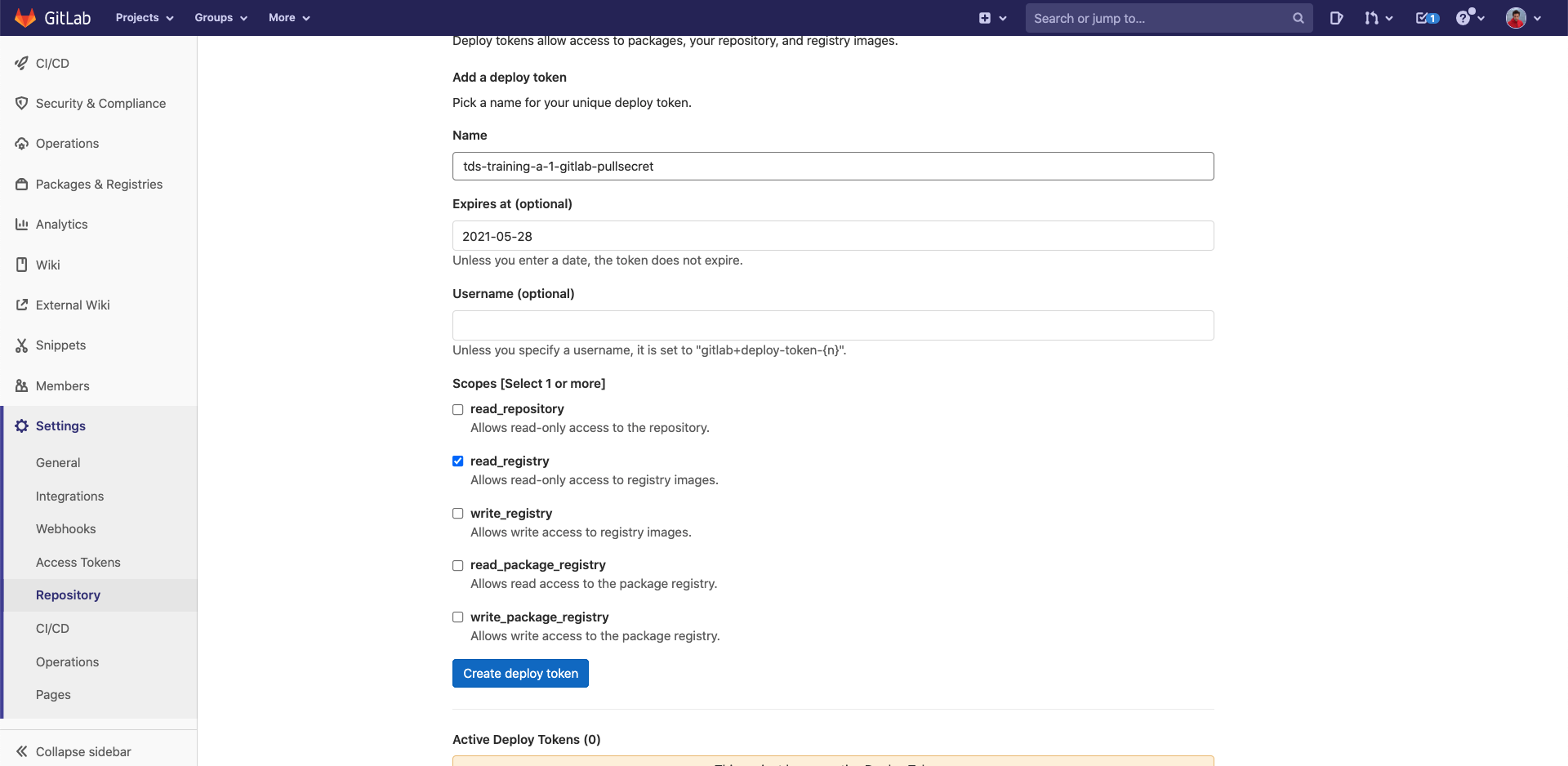

Allow Kubernetes to pull the Docker image

In your gitlab project:

- Click on "Settings", then "Repository", then "Deploy tokens"

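Fill in the fields as follows:

- Name: usethalescontainerbaseimage-pulltoken

- Expires at: choose an expiry date

- Scopes: tick "read_registry"

- Click on "Create deploy token"

Now copy the username and the token: GitLab displays the token value only once.

Next, create a Kubernetes namespace and, in it, a secret that contains the GitLab deploy token. In this example, dev is created as a subnamespace of customer-namespaces with the kubectl hns plugin (hierarchical namespaces).

(source: Kubernetes official documentation)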

$ kubectl hns create dev -n customer-namespaces

$ kubectl create secret docker-registry usethalescontainerbaseimage-pulltoken --docker-server=registry.thalesdigital.io --docker-username=TOKEN_USERNAME --docker-password=TOKEN_VALUE -n dev

secret/usethalescontainerbaseimage-pulltoken created

Kubernetes uses this secret to pull your image in the next steps.
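The secret created above is of type kubernetes.io/dockerconfigjson: it stores a Docker config JSON in which each registry entry carries the username, the password and an auth field equal to base64("username:password"). The sketch below reproduces that structure locally, which can help when debugging image pull errors. The function names are hypothetical, the credentials in the usage note are placeholders, and it assumes they contain no characters that need JSON escaping.

```shell
#!/bin/sh
# Compute the "auth" field of a Docker config entry: base64("username:password").
# tr -d '\n' removes any line wrapping added by base64.
docker_auth() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Print a minimal Docker config JSON for a single registry.
# Assumption: the values contain no characters that need JSON escaping.
docker_config_json() {
  server="$1"; user="$2"; pass="$3"
  printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$pass" "$(docker_auth "$user" "$pass")"
}
```

To compare with what Kubernetes actually stored, decode the secret with `kubectl get secret usethalescontainerbaseimage-pulltoken -n dev -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d`; its auth value should match `docker_auth TOKEN_USERNAME TOKEN_VALUE`.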

Create a simple Kubernetes deployment

touch kubernetes_deployment.yaml

Fill it with:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: usethalescontainerbaseimage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: usethalescontainerbaseimage
  template:
    metadata:
      labels:
        app: usethalescontainerbaseimage
    spec:
      containers:
        - name: usethalescontainerbaseimage
          image: TOBEREPLACE
      imagePullSecrets:
        - name: usethalescontainerbaseimage-pulltoken

Final steps

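The CI environment variable DOCKER_AUTH_CONFIG is also propagated to the Kubernetes environment, so that Docker images can be pulled from the binary repository (Artifactory). In the build:image job, it is written to /kaniko/.docker/config.json, which gives Kaniko the credentials for both Artifactory (to pull the base image) and the GitLab registry (to push the project image).

Create the following variables in Settings > CI/CD > Variables:

- NAMESPACE_K8SAAS: your K8SaaS namespace (dev in this example).

- KUBE_CONFIG_K8SAAS: the generic CI/CD kubeconfig, encoded in base64. See the documentation here to get it.

- DOCKER_AUTH_CONFIG: the following JSON. Keep $CI_REGISTRY, $CI_REGISTRY_USER and $CI_REGISTRY_PASSWORD exactly as written.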

{
  "auths": {
    "artifactory.thalesdigital.io": {
      "username": "<<YOUR_EMAIL_ADDRESS>>",
      "password": "<<YOUR_JFROG_API_TOKEN>>"
    },
    "$CI_REGISTRY": {
      "username": "$CI_REGISTRY_USER",
      "password": "$CI_REGISTRY_PASSWORD"
    }
  }
}
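Two of these values are easy to get wrong by hand: KUBE_CONFIG_K8SAAS must be valid base64 (the deploy job decodes it with base64 -d), and DOCKER_AUTH_CONFIG must keep the $CI_REGISTRY placeholders literal. The sketch below prepares both in POSIX shell; the function names are hypothetical, and the email and token are assumed not to contain characters that need JSON escaping.

```shell
#!/bin/sh
# Encode a kubeconfig file as a single-line base64 string for the
# KUBE_CONFIG_K8SAAS variable (tr removes the line wrapping GNU base64 adds).
encode_kubeconfig() {
  base64 < "$1" | tr -d '\n'
}

# Print the DOCKER_AUTH_CONFIG value on one line. The format string is
# single-quoted, so this shell does NOT expand $CI_REGISTRY, $CI_REGISTRY_USER
# and $CI_REGISTRY_PASSWORD: they stay literal, as required.
# Assumption: email and token contain no characters needing JSON escaping.
docker_auth_config() {
  email="$1"; token="$2"
  printf '{"auths":{"artifactory.thalesdigital.io":{"username":"%s","password":"%s"},"$CI_REGISTRY":{"username":"$CI_REGISTRY_USER","password":"$CI_REGISTRY_PASSWORD"}}}\n' \
    "$email" "$token"
}
```

For example, `encode_kubeconfig path/to/ci-kubeconfig` (a hypothetical path) prints the value to paste into KUBE_CONFIG_K8SAAS, and `docker_auth_config YOUR_EMAIL_ADDRESS YOUR_JFROG_API_TOKEN` prints the DOCKER_AUTH_CONFIG value. The single quotes matter: with double quotes, your local shell would expand $CI_REGISTRY and the other placeholders to empty strings.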

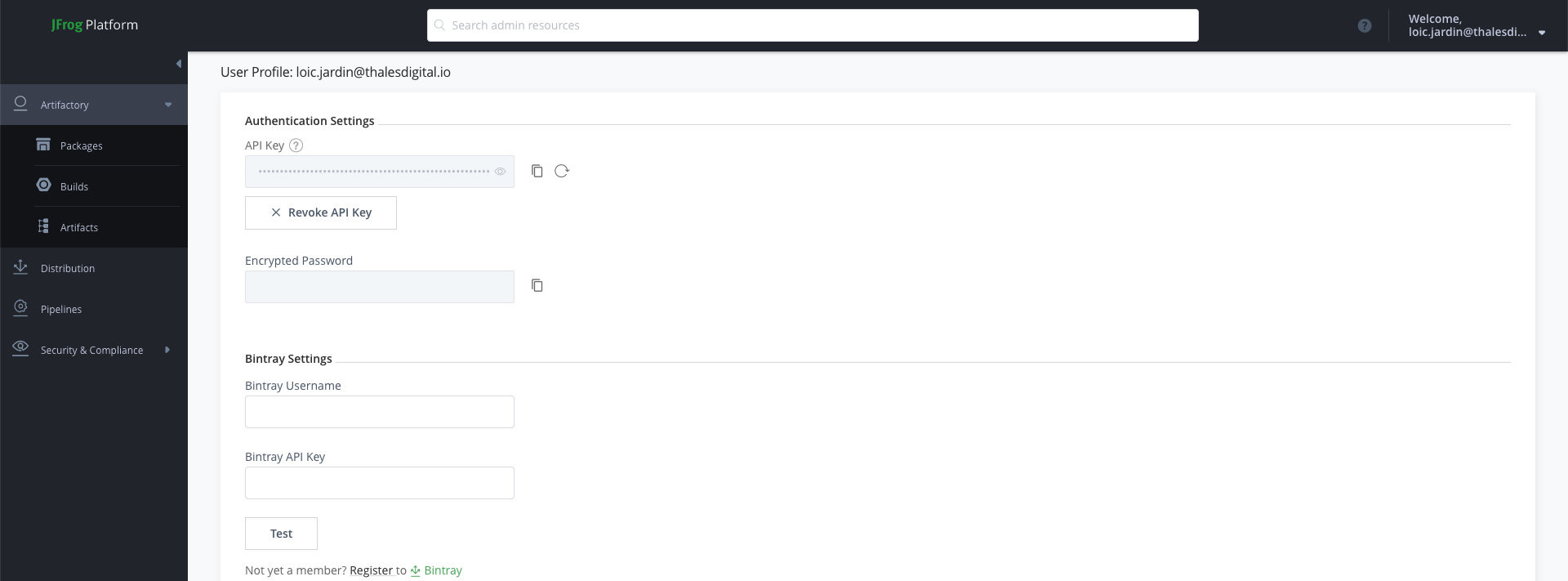

How to get the API token in JFrog?

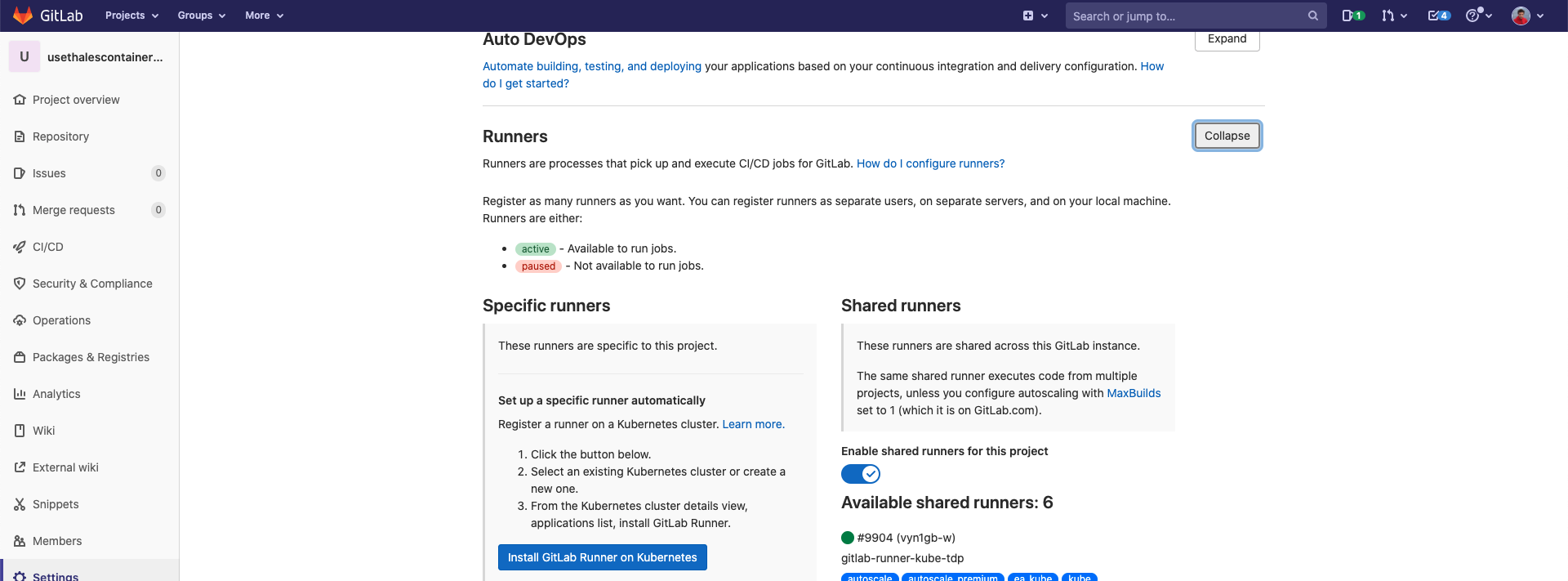

Before pushing the project, make sure shared runners are enabled for it.

Then commit and push to master:

$ git add .
$ git commit -m "first commit"
[master (root-commit) bfacdad] first commit
 3 files changed, 63 insertions(+)
 create mode 100644 .gitlab-ci.yml
 create mode 100644 Dockerfile
 create mode 100644 kubernetes_deployment.yaml
$ git push origin master
Enumerating objects: 5, done.
Counting objects: 100% (5/5), done.
Delta compression using up to 16 threads
Compressing objects: 100% (5/5), done.
Writing objects: 100% (5/5), 1.36 KiB | 1.36 MiB/s, done.
Total 5 (delta 0), reused 0 (delta 0)
To https://gitlab.thalesdigital.io/loic.jardin.e/usethalescontainerbaseimage.git
 * [new branch] master -> master

Test your deployment

Get the events:

$ kubectl get events -n YOUR_NAMESPACE

You should see lines similar to these:

0s Normal Pulling pod/usethalescontainerbaseimage-7f6c64b574-54xpf Pulling image "registry.thalesdigital.io/loic.jardin.e/usethalescontainerbaseimage:945363-998a0c3acc7179ddc2d53732d2a668f8ccc4300b"

0s Normal Pulled pod/usethalescontainerbaseimage-7f6c64b574-54xpf Successfully pulled image "registry.thalesdigital.io/loic.jardin.e/usethalescontainerbaseimage:945363-998a0c3acc7179ddc2d53732d2a668f8ccc4300b" in 5.663751434s

Then look at the pods in your namespace:

$ kubectl get pods -n YOUR_NAMESPACE

usethalescontainerbaseimage-7f6c64b574-54xpf 1/2 NotReady 2 33s

Note: the container is not a long-running process (it prints a message and exits), so the NotReady status is expected :)

Get the logs of the container:

$ kubectl logs usethalescontainerbaseimage-7f6c64b574-54xpf -n dev -c usethalescontainerbaseimage

Welcome to the k8saas membership team!

TADA! You have just deployed a single container built from a Thales-approved base image.