Pod to Pod Encryption with Linkerd

Context

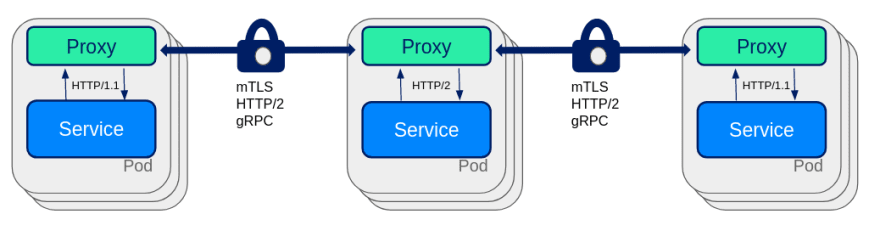

K8SaaS provides transparent mTLS (mutual TLS) that encrypts all communication between pods injected with the Linkerd proxy.

Use case

- Start developing from scratch securely, with the confidentiality of data in transit ensured by default

- Delegate certificate management (generation, rotation, policy) to K8SaaS

What to do?

Nothing! The service is enabled by default.

HOWTO

Disable the pod-to-pod mTLS encryption

Note: Before disabling the encryption, make sure the network flow is already encrypted at the application level. If it is not, contact your security partner to validate the exception.

You can disable the encryption completely by disabling injection of the Linkerd proxy sidecar. To do so, add the following annotation to your pod template:

```yaml
spec:
  template:
    metadata:
      annotations:
        linkerd.io/inject: disabled
```
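The sketch below shows where the annotation sits in a complete Deployment. The name, labels and image are hypothetical placeholders. The annotation is part of the pod template, so applying the change triggers a rollout, and the new pods start without the proxy.

```yaml
# Hypothetical Deployment: name, labels and image are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
  # An annotation placed here, on the Deployment itself, is not seen by
  # the proxy injector, which looks at the pod annotations.
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
      annotations:
        # Pods created from this template are not injected with the proxy.
        linkerd.io/inject: disabled
    spec:
      containers:
        - name: my-app
          image: my-registry/my-app:1.0.0
```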

You can also disable the encryption only for specific ports:

```yaml
spec:
  template:
    metadata:
      annotations:
        # Annotation values are strings, so the port number must be quoted.
        config.linkerd.io/skip-inbound-ports: "8080"
        config.linkerd.io/skip-outbound-ports: "8080"
```
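Both annotations accept a comma-separated list when several ports must bypass the proxy. The sketch below assumes a hypothetical workload that exposes a metrics port and talks to a database. The port numbers are placeholders. Check the range syntax (9000-9010) against the Linkerd version deployed by K8SaaS before relying on it.

```yaml
# Pod template fragment; all port numbers are placeholders.
spec:
  template:
    metadata:
      annotations:
        # Inbound traffic to port 9100 reaches the container directly,
        # without going through the proxy (no mTLS).
        config.linkerd.io/skip-inbound-ports: "9100"
        # Outbound traffic to these ports bypasses the proxy (no mTLS).
        # Range support ("9000-9010") is an assumption to verify.
        config.linkerd.io/skip-outbound-ports: "5432,9000-9010"
```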

Working with network policies

By design, K8SaaS reinforces network segregation by isolating the cluster within its own virtual network. However, additional security requirements sometimes call for isolating workloads within the cluster as well.

With Linkerd, certain flows must remain open so that communication between the control plane and the data plane (the proxies) is not broken.

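The allow-linkerd-control-plane-egress policy must be applied to enable communication with the Linkerd control plane. The example below combines three policies:

- allow-linkerd-control-plane-egress: allows egress to the linkerd namespace

- egress-kube-dns: allows DNS resolution through kube-dns

- default-deny-all: denies all other egress traffic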

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: allow-linkerd-control-plane-egress
spec:
  podSelector: {}
  # Without an explicit policyTypes, Kubernetes also treats this policy
  # as an Ingress policy and blocks all inbound traffic to the pods.
  policyTypes:
    - Egress
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: linkerd
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: egress-kube-dns
spec:
  egress:
    - ports:
        - port: 53
          protocol: UDP
        - port: 53
          protocol: TCP
      to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
          podSelector:
            matchLabels:
              k8s-app: kube-dns
  podSelector: {}
  policyTypes:
    - Egress
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all
spec:
  podSelector: {}
  policyTypes:
    - Egress
```
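default-deny-all also blocks egress between pods of the same namespace, whether they are meshed or not. If those calls are expected, an additional policy similar to the sketch below can re-open them. The policy name is hypothetical. The scope (same namespace, all ports) is an assumption that you should tighten for your workload.

```yaml
# Hypothetical policy name.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace-egress
spec:
  podSelector: {}
  policyTypes:
    - Egress
  egress:
    # A podSelector without a namespaceSelector only matches pods in this
    # policy's own namespace. No "ports" field: all ports are allowed.
    - to:
        - podSelector: {}
```

NetworkPolicies are additive. This policy only adds allowed destinations on top of the three above, so egress to other namespaces stays denied. If the receiving pods have ingress policies, those policies must allow the traffic as well.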

Working with jobs

When a job completes, its pod keeps running because the linkerd-proxy container is still alive. The Linkerd proxy container is not declared as a sidecar container, so it does not exit when the main job container finishes, and the Job never reaches completion.

There are a few workarounds for this issue:

- Disable injection: add the linkerd.io/inject: disabled annotation to the job's pod template, so that the pod is not injected with the proxy. The annotation must be on the pod template itself (jobTemplate.spec.template.metadata for a CronJob), not at the CronJob level. This option is only valid when the job does not call other meshed pods.

- Call the Linkerd shutdown hook with a POST request to http://localhost:4191/shutdown at the end of the process:

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: my-job
spec:
  concurrencyPolicy: Allow
  failedJobsHistoryLimit: 1
  jobTemplate:
    metadata:
      name: my-job
    spec:
      template:
        spec:
          # Job pods must use Never or OnFailure.
          restartPolicy: Never
          containers:
            - command:
                - /bin/sh
                - -c
                # Run the job (echo is a placeholder), always stop the proxy,
                # then exit with the job's own status.
                - echo "john"; rc=$?; curl -s -XPOST http://localhost:4191/shutdown; exit $rc
              image: curlimages/curl
              imagePullPolicy: Always
              name: my-job
  schedule: "*/1 * * * *"
  successfulJobsHistoryLimit: 3
  suspend: false
```

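- Implement your own sidecar that calls the shutdown hook (see sample here).

- Use linkerd-await. This wrapper waits for the proxy to be ready and runs the job command. With its --shutdown option, it shuts the proxy down when the command exits.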
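The two sketches below cover the first and last workarounds. All names, images and paths are hypothetical. The first sketch disables injection on the pod template of a CronJob. The second wraps a Job command with linkerd-await and assumes an image that ships the linkerd-await binary at /linkerd-await alongside a /my-job program.

```yaml
# "Disable injection": the annotation is on the pod template
# (jobTemplate.spec.template.metadata), not on the CronJob metadata.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: my-unmeshed-job
spec:
  schedule: "*/5 * * * *"
  jobTemplate:
    spec:
      template:
        metadata:
          annotations:
            linkerd.io/inject: disabled
        spec:
          restartPolicy: Never
          containers:
            - name: my-job
              image: busybox
              command: ["/bin/sh", "-c", "echo done"]
---
# "linkerd-await": waits for the proxy, runs /my-job and, with --shutdown,
# stops the proxy when /my-job exits. Image and paths are hypothetical.
apiVersion: batch/v1
kind: Job
metadata:
  name: my-meshed-job
spec:
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: my-job
          image: my-registry/my-job-with-linkerd-await:1.0.0
          command: ["/linkerd-await", "--shutdown", "--", "/my-job"]
```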

TROUBLESHOOTING

Performance issues

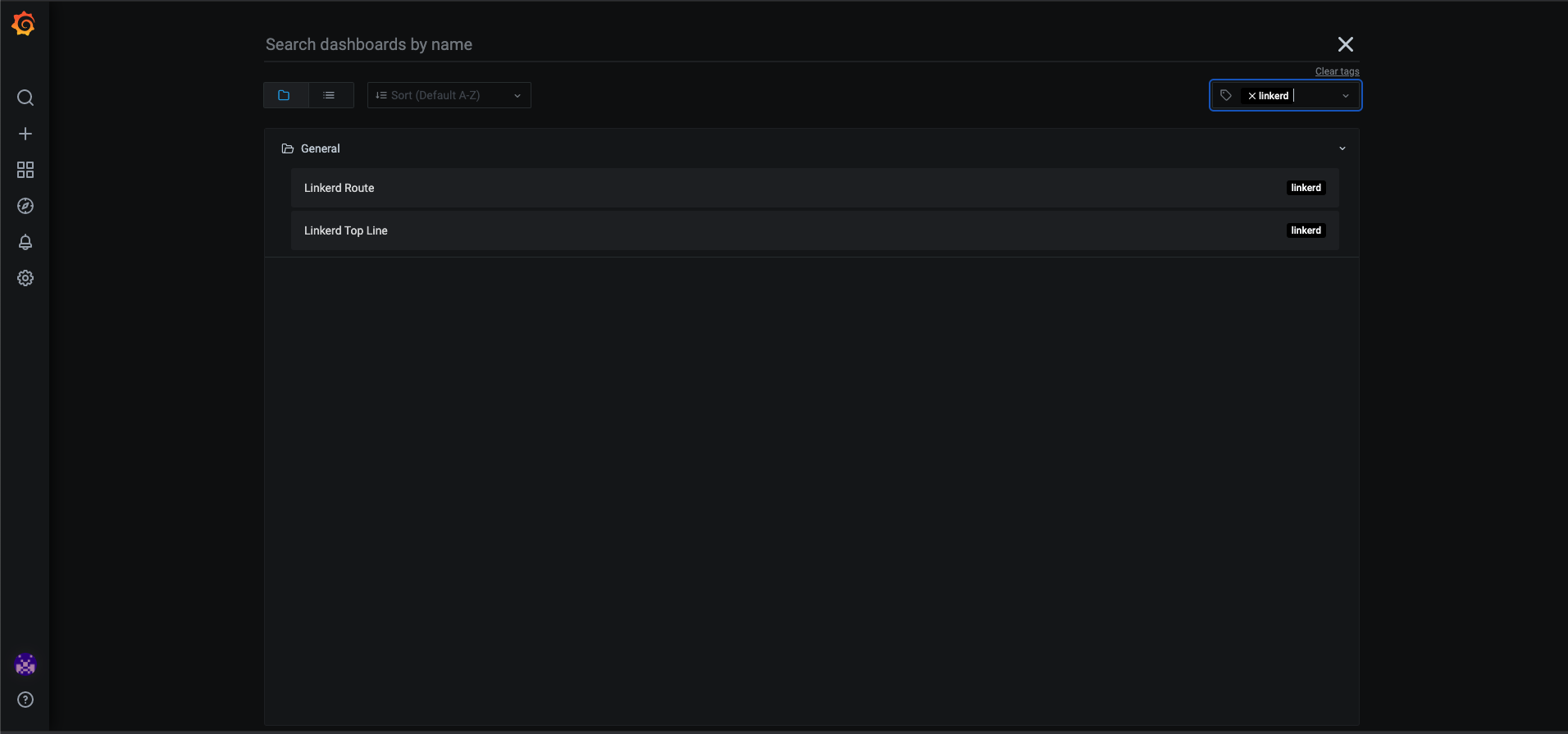

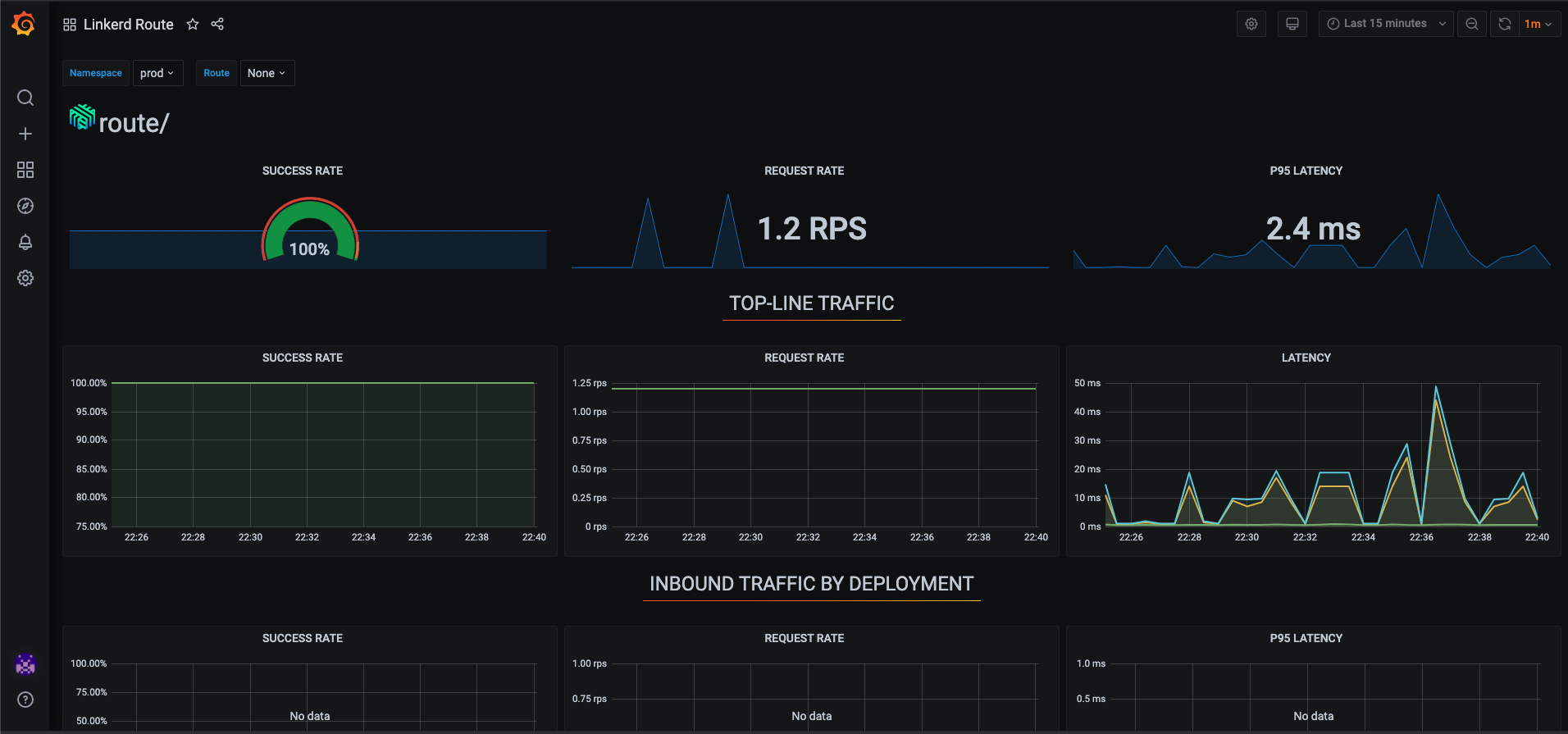

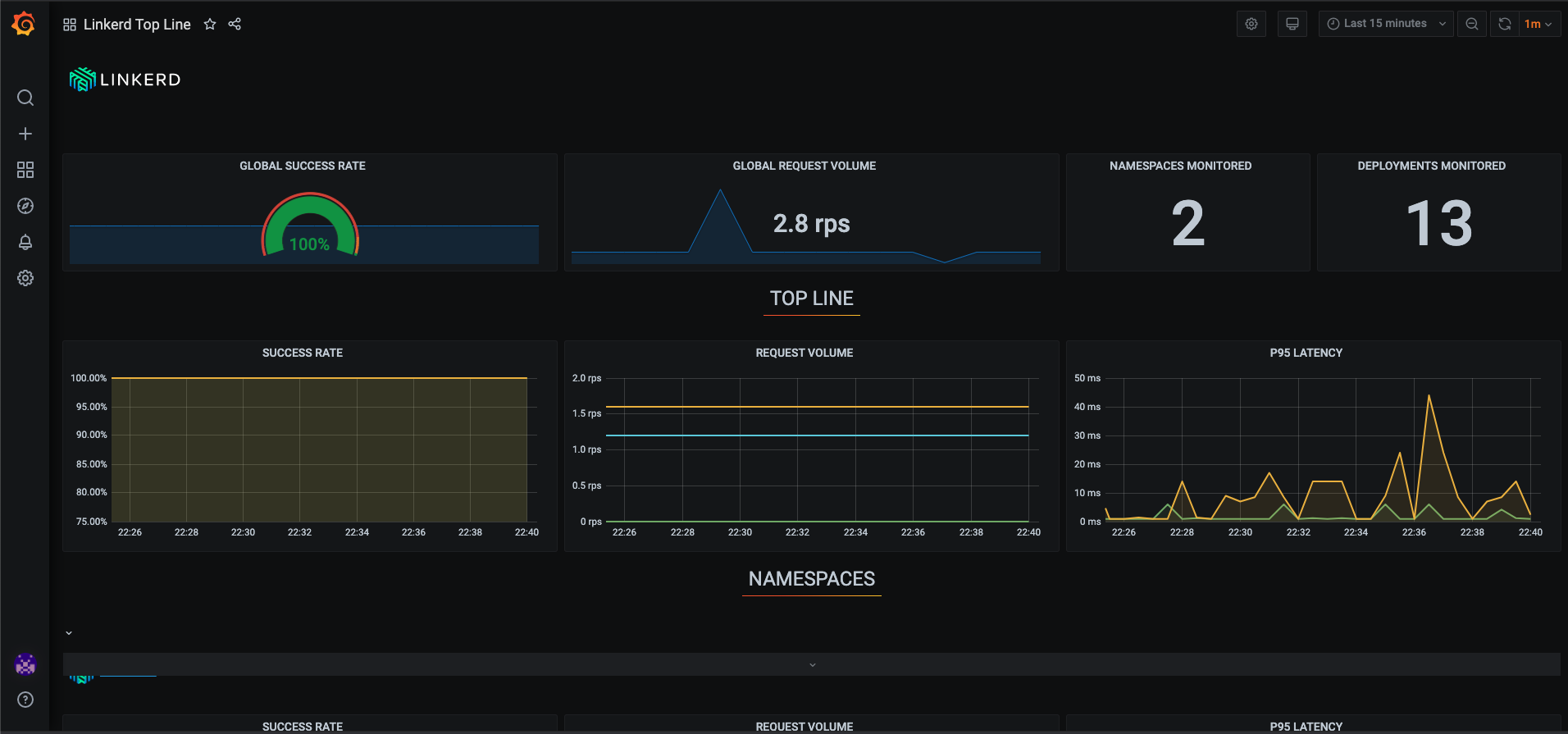

K8SaaS provides a monitoring console based on Grafana. To learn how to access it, see the getting started page.

There you should find at least two Linkerd dashboards:

- Linkerd Route

- Linkerd Top Line

Linkerd Viz

Linkerd Viz is an extension of the Linkerd service mesh that provides a full on-cluster metrics stack. It gives a high-level, real-time view of what is happening with your services. Its main features are:

- Top Line Metrics: the “golden” metrics for each deployment: success rate, request rate, and latency distribution percentiles.

- Deployment Detail: Provides detailed information about each deployment.

- Top: Gives a real-time view of which paths are being called.

- Tap: Shows the stream of requests across a single pod, deployment, or even everything in the namespace.

Linkerd Viz should only be used for debugging purposes. Do not leave it running in production environments, as it may impact performance.

Linkerd Viz can be requested via a generic postit request.

TcpDump

Sometimes you need network visibility into the traffic handled by linkerd-proxy.

To enable the debug sidecar, you can add the config.linkerd.io/enable-debug-sidecar: "true" annotation to your pod specification. This can be done manually in the pod’s YAML file, or automatically by using the linkerd inject --enable-debug-sidecar command when injecting the Linkerd proxy.

```shell
kubectl get -o yaml deployment my-deployment | linkerd inject --enable-debug-sidecar - | kubectl apply -f -
```
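For the manual option, the annotation goes on the pod template of the workload, as in the fragment below.

```yaml
# Pod template fragment (e.g. in a Deployment).
spec:
  template:
    metadata:
      annotations:
        # Must be the quoted string "true": annotation values are strings.
        config.linkerd.io/enable-debug-sidecar: "true"
```

Changing the pod template triggers a rollout, and the new pods get an additional container named linkerd-debug. Capture tools such as tcpdump or tshark are expected to be available in that container; confirm this for your Linkerd version. Remove the annotation once the investigation is over.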

More details here.

Next step

Go back to homepage