Persist data for your applications

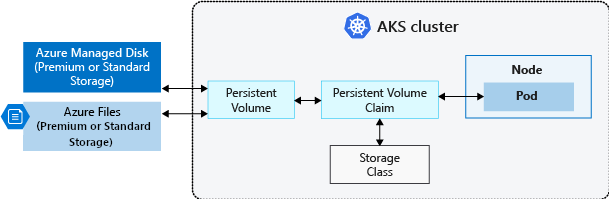

Kubernetes typically treats individual pods as ephemeral, disposable resources. Applications have different approaches available to them for using and persisting data. A volume represents a way to store, retrieve, and persist data across pods and through the application lifecycle.

Traditional volumes are created as Kubernetes resources backed by Azure Storage. You can manually create data volumes to be assigned to pods directly, or have Kubernetes automatically create them. Data volumes can use Azure Disks or Azure Files.

Applications running on k8saas may need to store and retrieve data. While some workloads can use fast, local storage on nodes that may later be removed or emptied, others require storage that persists on regular data volumes within the Azure platform.

Persistent volumes

A persistent volume represents a piece of storage that has been provisioned for use with Kubernetes pods. A persistent volume can be used by one or many pods, and can be dynamically provisioned.

Persistent volume claims

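A PersistentVolumeClaim requests storage of a particular StorageClass, access mode, and size. Once an available storage resource has been assigned to the pod requesting storage, the PersistentVolume is bound to the PersistentVolumeClaim. Persistent volumes are mapped 1:1 to claims.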

Storage Class

To define different tiers of storage, such as Premium and Standard, you can create a StorageClass.

The StorageClass also defines the reclaimPolicy. When you delete the PersistentVolumeClaim and the persistent volume is no longer required, the reclaimPolicy controls the behavior of the underlying Azure storage resource. The underlying storage resource can either be deleted or kept for use with a future pod.
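As an illustration, a StorageClass for a Standard SSD tier might look like the sketch below. The class name `example-standard-retain` is hypothetical, and the parameter values are assumptions chosen for the example rather than the definition of any class provided by k8saas; `skuName` is the Azure Disk CSI driver parameter that selects the disk type.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: example-standard-retain   # hypothetical name, not a k8saas class
provisioner: disk.csi.azure.com   # Azure Disks CSI driver
parameters:
  skuName: StandardSSD_LRS        # storage tier (assumed value for this sketch)
reclaimPolicy: Retain             # keep the Azure disk after the claim is deleted
allowVolumeExpansion: true        # allow claims of this class to be resized
volumeBindingMode: WaitForFirstConsumer  # provision the disk once a pod is scheduled
```

A claim selects this tier by setting `storageClassName` to the class name.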

Storage classes available in the k8saas offer

| Storage class | Provisioner (AKS) | Reclaim policy | Disk type | Expandable | Legacy |
|---|---|---|---|---|---|
| azurefile | file.csi.azure.com | Delete | Standard_LRS | Yes | No |
| azurefile-csi | file.csi.azure.com | Delete | Standard_LRS | Yes | No |
| azurefile-csi-premium | file.csi.azure.com | Delete | Premium_LRS | Yes | No |
| azurefile-csi-premium-zrs | file.csi.azure.com | Delete | Premium_LRS | Yes | No |
| azurefile-csi-zrs | file.csi.azure.com | Retain | Standard_ZRS | Yes | No |
| azurefile-premium | file.csi.azure.com | Delete | Premium_LRS | Yes | No |
| azurefile-premium-zrs | file.csi.azure.com | Retain | Premium_ZRS | Yes | No |
| default | disk.csi.azure.com | Delete | StandardSSD_ZRS | Yes | No |
| managed | disk.csi.azure.com | Delete | StandardSSD_LRS | Yes | No |
| managed-premium | disk.csi.azure.com | Delete | Premium_ZRS | Yes | No |
| managed-csi | disk.csi.azure.com | Delete | StandardSSD_ZRS | Yes | No |
| managed-csi-zrs | disk.csi.azure.com | Delete | StandardSSD_ZRS | Yes | No |
| managed-csi-premium-zrs | disk.csi.azure.com | Delete | Premium_ZRS | Yes | No |
| default-retain | azure-disk | Retain | StandardSSD_LRS | Yes | Yes |
| super-ssd | azure-disk | Retain | Premium_LRS | Yes | Yes |

If the existing storage classes do not fit your needs, please open a request on PostIt.

Legacy storage classes should not be used: the azure-disk provisioner has been deprecated since AKS 1.24 and can be removed at any time.

Which provisioner should I use?

- disk.csi.azure.com

Use Azure Disks to create a Kubernetes DataDisk resource. Disks can use Azure Premium storage, backed by high-performance SSDs, or Azure Standard storage, backed by regular HDDs. Since Azure Disks are mounted as ReadWriteOnce, they are only available to a single node at a time. For storage volumes that can be accessed by pods on multiple nodes simultaneously, use Azure Files.

- file.csi.azure.com

Use Azure Files to mount an SMB 3.0 share backed by an Azure Storage account to pods. Azure Files lets you share data across multiple nodes and pods, and can use Azure Premium storage, backed by high-performance SSDs, or Azure Standard storage, backed by regular HDDs.
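To make the difference concrete, here is a minimal sketch of a claim for a share that several pods can mount at once. It assumes the `azurefile-csi` class from the table above and the `customer-namespaces` namespace used in the examples on this page; the claim name `shared-data-claim` is hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-data-claim          # hypothetical name
  namespace: customer-namespaces
spec:
  accessModes:
    - ReadWriteMany                # available with Azure Files
  storageClassName: azurefile-csi
  resources:
    requests:
      storage: 5Gi                 # arbitrary size for this sketch
```

Every pod that should share the data references this claim through `persistentVolumeClaim.claimName`.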

Retain Policy

For dynamically provisioned PersistentVolumes, the default reclaim policy is "Delete". This means that a dynamically provisioned volume is automatically deleted when a user deletes the corresponding PersistentVolumeClaim. This automatic behavior might be inappropriate if the volume contains precious data. In that case, it is more appropriate to use the "Retain" policy. With the "Retain" policy, if a user deletes a PersistentVolumeClaim, the corresponding PersistentVolume will not be deleted. Instead, it is moved to the Released phase, where all of its data can be manually recovered.

default-retain uses a specific reclaim policy "Retain".

Since we use Velero for PersistentVolume (PV) backups, the default-retain class should not be used and will be deprecated.

How to attach a disk to my pods?

First, create a PersistentVolumeClaim (PVC) with the desired storage class and storage size:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: k8saas-helloworld-claim
      namespace: customer-namespaces
    spec:
      accessModes:
        - ReadWriteOnce # Mandatory for azure-disk, you can use ReadWriteMany with azure-file
      storageClassName: managed
      resources:
        requests:
          storage: 5Gi

- Note: ReadWriteOnce means the volume can be mounted read-write by a single node at a time; pods scheduled on other nodes cannot use this PVC.

Once the PersistentVolumeClaim is applied, a PersistentVolume is dynamically provisioned.

Then configure your pod to use the created PVC (the claimName field must match the PVC name):

    kind: Pod
    apiVersion: v1
    metadata:
      name: mypod
      namespace: customer-namespaces
    spec:
      containers:
        - name: myk8saasdemopod
          image: k8saas/mypod:latest
          volumeMounts:
            - mountPath: "/conf"
              name: volume
      volumes:
        - name: volume
          persistentVolumeClaim:
            claimName: k8saas-helloworld-claim

Useful commands

List your PVCs

    kubectl get pvc -n customer-namespaces
    NAMESPACE             NAME                      STATUS   VOLUME                                      CAPACITY   ACCESS MODES   STORAGECLASS      AGE
    customer-namespaces   k8saas-helloworld-claim   Bound    pvc-23720b6e-32a9-42c6-ab7c-9b1110680fd4c   5Gi        RWO            managed-premium   1h

Why is my PVC status Pending?

Check the events in the namespace to find out why the claim has not been bound.

Example:

    kubectl get events -n customer-namespaces
    LAST SEEN   TYPE     REASON                 OBJECT                                       MESSAGE
    105s        Normal   ExternalProvisioning   persistentvolumeclaim/pvc-azuredisk-shared   waiting for a volume to be created, either by external provisioner "exemple" or manually created by system administrator

Feel free to contact support by opening a Zendesk ticket.

How to extend a volume

- Stop the pod(s) using the PVC.

- Update the resources.requests.storage field of the PVC with the new size.

- Recreate the pods.
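For instance, growing the claim from the attach example on this page from 5Gi to 10Gi would mean re-applying the same manifest with only the requested size changed. The new size of 10Gi is an arbitrary value for illustration, and the sketch assumes the storage class is expandable, as the classes listed in the table are.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: k8saas-helloworld-claim
  namespace: customer-namespaces
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: managed
  resources:
    requests:
      storage: 10Gi   # was 5Gi; the requested size can only be increased
```

After applying the updated manifest, `kubectl get pvc -n customer-namespaces` should eventually report the new capacity once the pods are running again.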

Limitations

Disk caching isn't supported for disks of 4 TiB and larger. For example, if you want to use a 4 TiB disk, you must create a storage class that defines cachingmode: None.
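A minimal sketch of such a storage class follows. The name `example-premium-nocache` and the `Premium_LRS` tier are assumptions for illustration; `cachingMode` is the Azure Disk CSI driver parameter that controls host caching.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: example-premium-nocache   # hypothetical name
provisioner: disk.csi.azure.com
parameters:
  skuName: Premium_LRS            # assumed tier for this sketch
  cachingMode: None               # needed for disks of 4 TiB and larger
reclaimPolicy: Delete
allowVolumeExpansion: true
```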

Reducing the size of a PVC is not supported, to prevent data loss. You can edit an existing storage class by using the kubectl edit sc command.