Azure CNI Overlay

Context

What is CNI?

CNI (Container Network Interface) is a framework for dynamically configuring networking resources. It consists of a specification and a set of libraries written in Go. The plugin specification defines an interface for configuring the network, provisioning IP addresses, and maintaining connectivity across multiple hosts.

What we use today: Azure CNI with the flat network model. A flat network model in AKS assigns IP addresses to pods from a subnet in the same Azure virtual network as the AKS nodes. Traffic leaving the cluster is not SNAT'd, so the pod IP address is directly exposed to the destination. This model can be useful for scenarios such as exposing pod IP addresses to external services.

With Azure CNI, each pod gets its own IP address by default. This is good for security, because every pod is individually identifiable on the network, but it caps the number of pods at what the IP range can hold. Azure CNI Overlay removes this limitation.

Objectives

For customers:

- No longer be limited in the number of pods

For operations:

- Reduce the required IP range to /27

For security:

- Be able to track pod-to-pod traffic on the same node

- Still be able to monitor egress and ingress

- Keep a history of communications

Solution: Azure CNI Overlay

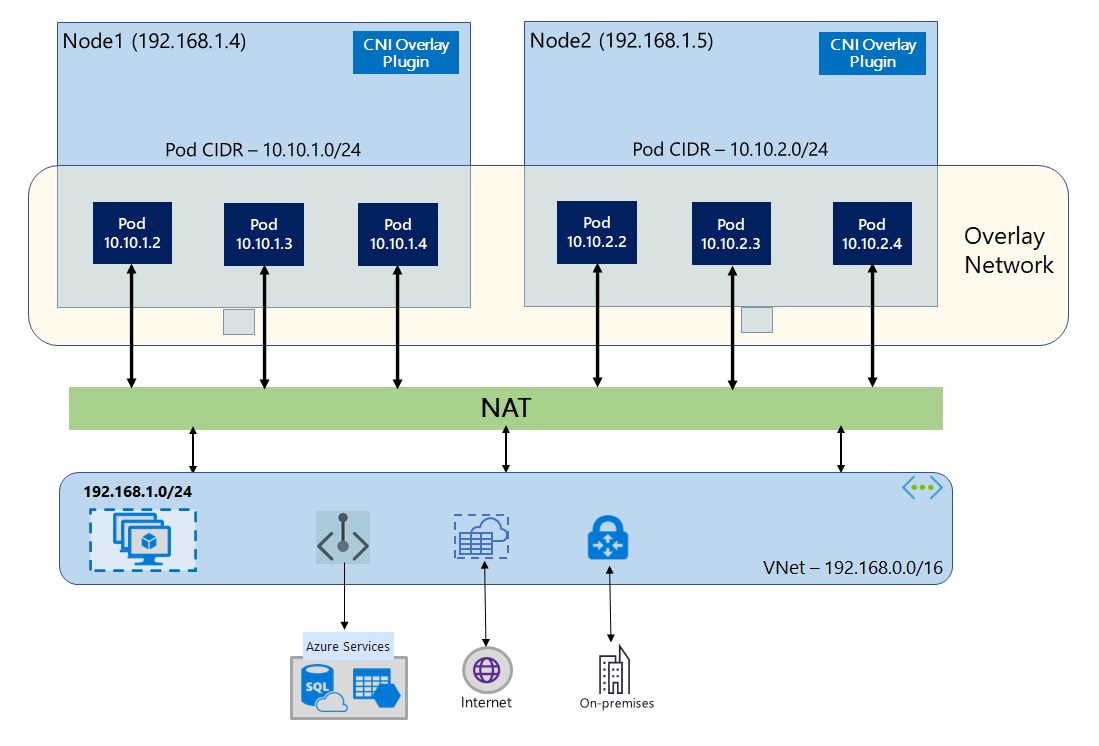

With Azure CNI Overlay:

- The cluster nodes are deployed into an Azure Virtual Network (VNet) subnet.

- Pods are assigned IP addresses from a private CIDR that is logically separate from the VNet hosting the nodes.

- Pod and node traffic within the cluster uses an overlay network.

- Network Address Translation (NAT) uses the node's IP address to reach resources outside the cluster.

This solution saves a significant number of VNet IP addresses and lets us scale our clusters to large sizes. An extra advantage is that the same private CIDR can be reused across different AKS clusters, which extends the IP space available for containerized applications in Azure Kubernetes Service (AKS).
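The separation between the node subnet and the pod CIDR can be checked with a few lines of Python. This is only a minimal sketch: the address ranges and function names are hypothetical, and it assumes that each node receives one /24 block carved out of the pod CIDR, as the Azure documentation describes for CNI Overlay.

```python
import ipaddress

# Hypothetical ranges, for illustration only.
NODE_SUBNET = ipaddress.ip_network("10.10.0.0/27")
POD_CIDR = ipaddress.ip_network("192.168.0.0/16")

# Assumption: each node gets one /24 block out of the pod CIDR.
NODE_BLOCK_PREFIX = 24


def check_overlay_ranges(node_subnet, pod_cidr):
    """Raise ValueError if the pod CIDR overlaps the node subnet."""
    if node_subnet.overlaps(pod_cidr):
        raise ValueError(f"{pod_cidr} overlaps {node_subnet}")


def node_block_count(pod_cidr, block_prefix=NODE_BLOCK_PREFIX):
    """Number of per-node blocks that fit in the pod CIDR."""
    if pod_cidr.prefixlen > block_prefix:
        return 0
    return 2 ** (block_prefix - pod_cidr.prefixlen)


check_overlay_ranges(NODE_SUBNET, POD_CIDR)  # passes: the ranges are disjoint
print(node_block_count(POD_CIDR))  # 256 node blocks in a /16
```

Because the pod CIDR is private to each cluster, the same check can be run with an identical `POD_CIDR` for several clusters; only the node subnets need to be distinct within the VNet.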

We could also reduce the default cluster IP range from /24 to /27.

By default, the pod limit is set to 30 pods per node.
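To make the effect of the smaller range concrete, the sketch below compares the pod capacity of a /24 under the flat model with a /27 under Overlay. It rests on two assumptions that are not stated in this document: Azure reserves 5 addresses in every subnet, and in the flat model each node reserves one address for itself plus one per potential pod. It also ignores the spare addresses needed for surge nodes during upgrades, so the figures are upper bounds. The CIDRs and function names are hypothetical.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5  # assumption: addresses Azure keeps in each subnet


def usable_addresses(cidr):
    """Addresses left in the subnet after Azure's reserved ones."""
    total = ipaddress.ip_network(cidr).num_addresses
    return max(total - AZURE_RESERVED_PER_SUBNET, 0)


def flat_capacity(cidr, max_pods_per_node):
    """Flat model: a node consumes 1 address plus one per potential pod."""
    nodes = usable_addresses(cidr) // (1 + max_pods_per_node)
    return nodes, nodes * max_pods_per_node


def overlay_capacity(cidr, max_pods_per_node):
    """Overlay: only nodes consume subnet addresses; pods use the pod CIDR."""
    nodes = usable_addresses(cidr)
    return nodes, nodes * max_pods_per_node


print(flat_capacity("10.0.0.0/24", 30))     # (8, 240)
print(overlay_capacity("10.0.0.0/27", 30))  # (27, 810)
```

Under these assumptions, a /27 with Overlay holds more nodes and pods than a /24 with the flat model, even at the default of 30 pods per node.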

Network configuration

There are four network configurations for AKS:

- Azure CNI flat network: the default, one IP per pod

- Azure CNI Overlay: one IP per node, plus an overlay network with a private IP range for the pods

- Azure CNI Overlay with Cilium: not supported

- Bring your own CNI: not supported

Here are some core features:

| Feature | Azure CNI flat network | Azure CNI Overlay |
|---|---|---|
| Network | One IP per pod and per node | One IP per node |
| Max number of pods | Limited by the IP range | 250 per node (e.g. 100 nodes = 25,000 pods) |
| Managed by Azure | Yes | Yes |
| Minimum IP range needed | /24 | /27 |
| Service-to-pod communication | kube-proxy | kube-proxy |
| Pod-to-pod monitoring | NSG flow logs | Requires the paid advanced monitoring add-on |
| Persistent logs, egress / ingress | NSG flow logs | NSG flow logs |
| Persistent logs, pod to pod on the same node | NSG flow logs | None |
| Azure Application Gateway with Gateway API | Available | Not available |

Why use /27 instead of /24?

- It conserves our IP ranges for future needs.

- It gives us more flexibility for new processes, such as using another /27 for blue-green deployments or a /28 range for another feature like Firewall.
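As an illustration of that flexibility, the following sketch carves a single /24 into /27 blocks and takes a /28 out of one of them. The parent range and the variable names are hypothetical; the point is only to show how many smaller ranges the former cluster range can host.

```python
import ipaddress

# Hypothetical range that previously hosted a single cluster.
parent = ipaddress.ip_network("10.20.30.0/24")

# A /24 splits into eight /27 blocks.
blocks = list(parent.subnets(new_prefix=27))

cluster = blocks[0]      # current cluster
blue_green = blocks[1]   # second cluster for a blue-green switch
# First /28 of the third block, e.g. for another feature.
extra_feature = next(blocks[2].subnets(new_prefix=28))

print(len(blocks))     # 8
print(cluster)         # 10.20.30.0/27
print(blue_green)      # 10.20.30.32/27
print(extra_feature)   # 10.20.30.64/28
```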

Security

By the nature of the overlay network, all egress and ingress communication is still captured at the virtual network level (with the node IP), but pod-to-pod communication on the same node cannot be monitored there.

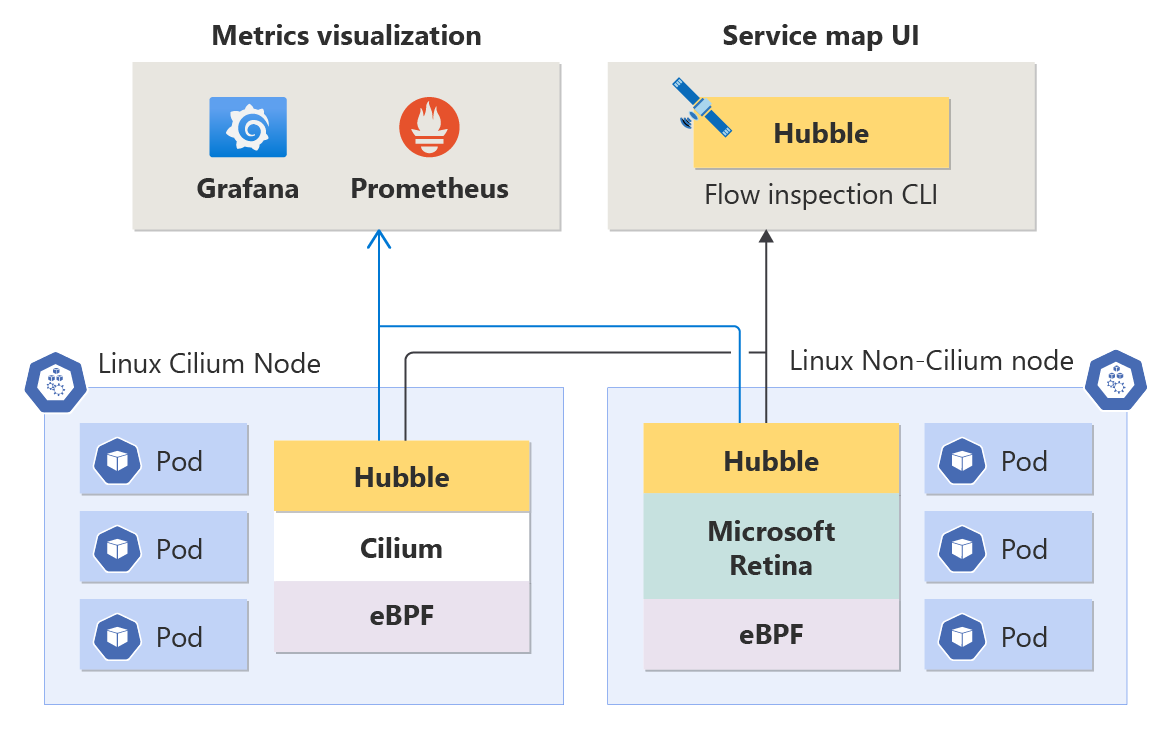

When clusters are deployed with Azure CNI Overlay, no pod-level monitoring is available by default; the Advanced Network Observability add-on must be activated.

Advanced Network Observability

This feature is in preview. It will not be supported until it reaches GA.

With Azure CNI Overlay, no observability is provided by default.

Advanced Network Observability, currently in preview, can be enabled to fill this gap.

It enables Hubble flows and the Hubble UI, as well as Prometheus and Grafana. It costs $0.025 per node per hour.
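For budgeting, the hourly price translates into a monthly figure as sketched below. The 730 hours per month is an assumption (an average month), and the function name is hypothetical; `Decimal` is used to avoid floating-point rounding on currency amounts.

```python
from decimal import Decimal

PRICE_PER_NODE_HOUR = Decimal("0.025")  # USD, price quoted above
HOURS_PER_MONTH = 730                   # assumption: average month length


def monthly_cost(node_count, hours=HOURS_PER_MONTH):
    """Estimated add-on cost in USD for node_count nodes running all month."""
    return PRICE_PER_NODE_HOUR * node_count * hours


print(monthly_cost(1))   # 18.250
print(monthly_cost(10))  # 182.500
```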

Export logs

There is currently no way to export these logs.

We would need to rely on another COTS product such as Tetragon, or on a service mesh such as Linkerd.

Upgrading an existing cluster to Overlay?

Because the full migration procedure provided by Azure is not yet GA, migrating an existing cluster is not possible.

The only way to get a K8saas cluster with CNI Overlay is to request the creation of a new cluster.