Requests and limits

This document explains resource requests, limits, and Quality of Service (QoS) classes.

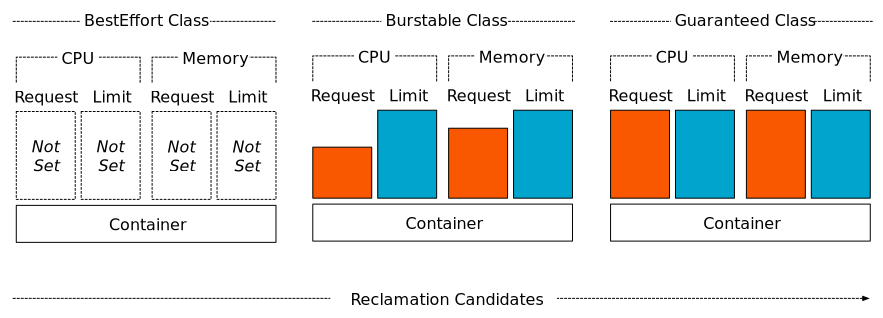

Kubernetes assigns a QoS class to every pod, as the examples in this document show, and you control which class a pod gets through the resource requests and limits you define.

One of the challenges of every distributed system designed to share resources between applications, like Kubernetes, is, paradoxically, how to share those resources properly. Applications were typically designed to run standalone on a machine and use all of the resources at hand. As the saying goes, good fences make good neighbors.

Explaining pod requests and limits

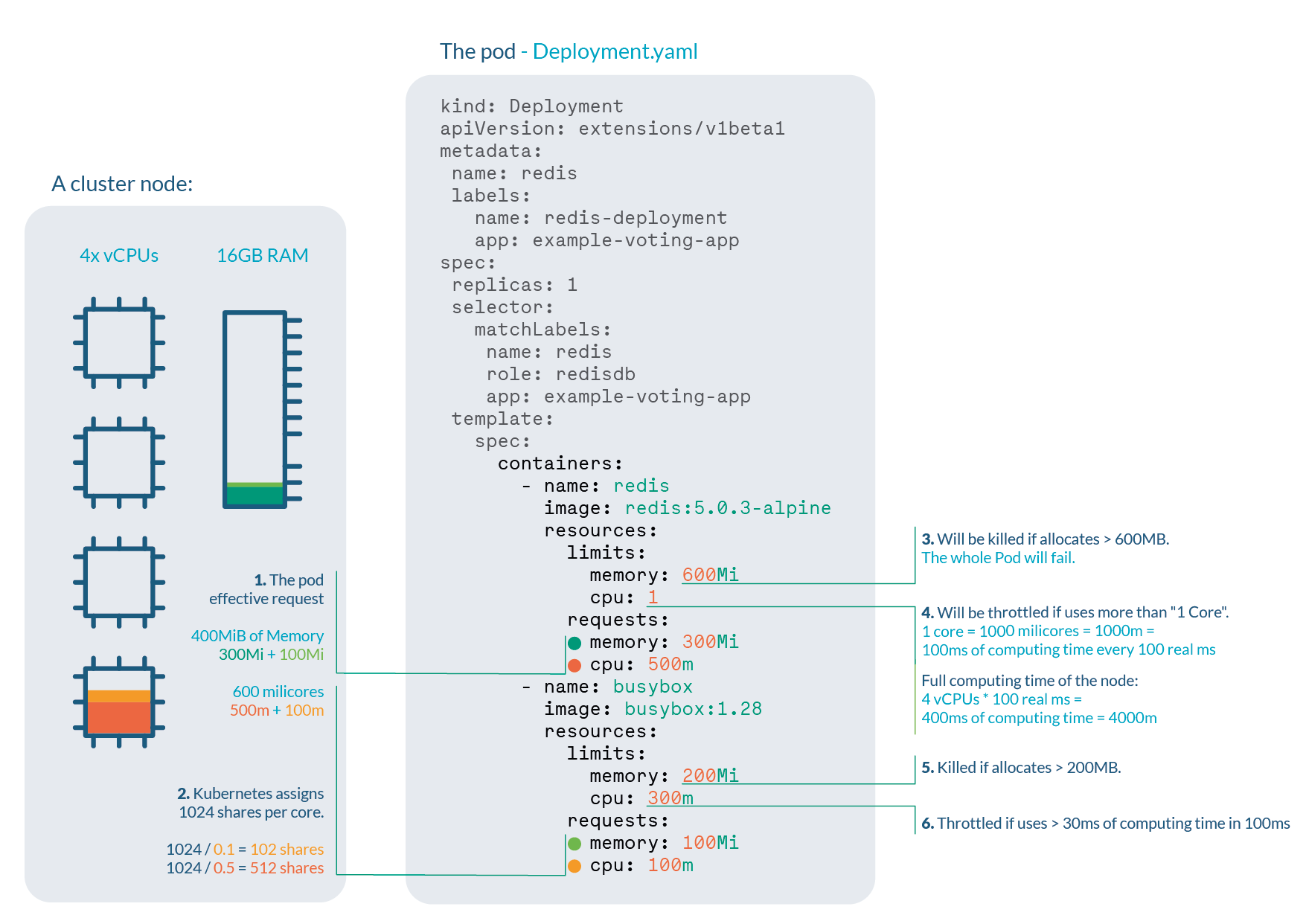

Let’s consider this example of a deployment:

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: redis
      labels:
        name: redis-deployment
        app: example-voting-app
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: redis
          role: redisdb
          app: example-voting-app
      template:
        metadata:
          # Pod labels must match spec.selector.matchLabels
          labels:
            name: redis
            role: redisdb
            app: example-voting-app
        spec:
          containers:
            - name: redis
              image: redis:5.0.3-alpine
              resources:
                limits:
                  memory: 600Mi
                  cpu: 1
                requests:
                  memory: 300Mi
                  cpu: 500m
            - name: busybox
              image: busybox:1.28
              resources:
                limits:
                  memory: 200Mi
                  cpu: 300m
                requests:
                  memory: 100Mi
                  cpu: 100m

Let’s say we are running a cluster whose nodes have, for example, 4 cores and 16 GB of RAM. We can extract a lot of information from this deployment:

- The pod’s effective request is 400 MiB of memory and 600 millicores of CPU. The scheduler needs a node with at least that much free allocatable capacity to place the pod.

- CPU shares for the redis container will be 512, and 102 for the busybox container. Kubernetes always assigns 1024 shares to every core, so:

  - redis: 1024 * 0.5 cores = 512

  - busybox: 1024 * 0.1 cores ≅ 102

- The redis container will be OOM killed if it tries to allocate more than 600 MiB of RAM, most likely making the pod fail.

- Redis will be CPU throttled if it tries to use more than 100 ms of CPU time in every 100 ms period (since the node has 4 cores, the total available time is 400 ms every 100 ms), causing performance degradation.

- The busybox container will be OOM killed if it tries to allocate more than 200 MiB of RAM, resulting in a failed pod.

- Busybox will be CPU throttled if it tries to use more than 30 ms of CPU time in every 100 ms period, causing performance degradation.
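
The arithmetic behind the list above can be reproduced with a short Python sketch. It is only an illustration: the helper names (`cpu_millicores`, `memory_bytes`, `cpu_shares`, `cfs_quota_ms`) are made up for this example, only a few quantity suffixes are handled, and it assumes cgroup v1 style CPU shares with the default 100 ms CFS period.

```python
from decimal import Decimal

# Subset of the memory suffixes Kubernetes accepts; enough for this example.
MEMORY_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_millicores(quantity) -> int:
    """Convert a CPU quantity such as "500m" or 1 to millicores."""
    text = str(quantity)
    if text.endswith("m"):
        return int(text[:-1])
    return int(Decimal(text) * 1000)


def memory_bytes(quantity) -> int:
    """Convert a memory quantity such as "300Mi" to bytes."""
    text = str(quantity)
    for suffix, factor in MEMORY_UNITS.items():
        if text.endswith(suffix):
            return int(Decimal(text[: -len(suffix)]) * factor)
    return int(text)  # plain number of bytes


def cpu_shares(request_millicores: int) -> int:
    """CPU shares: 1024 per requested core, with a floor of 2."""
    return max(2, request_millicores * 1024 // 1000)


def cfs_quota_ms(limit_millicores: int, period_ms: int = 100) -> float:
    """CPU time a container may use per period before it is throttled."""
    return limit_millicores * period_ms / 1000


# Requests and limits copied from the deployment above.
containers = {
    "redis": {"requests": {"cpu": "500m", "memory": "300Mi"},
              "limits": {"cpu": 1, "memory": "600Mi"}},
    "busybox": {"requests": {"cpu": "100m", "memory": "100Mi"},
                "limits": {"cpu": "300m", "memory": "200Mi"}},
}

# The pod's effective request is the sum over its (non-init) containers.
pod_cpu_request = sum(cpu_millicores(c["requests"]["cpu"]) for c in containers.values())
pod_memory_request = sum(memory_bytes(c["requests"]["memory"]) for c in containers.values())

for name, c in containers.items():
    shares = cpu_shares(cpu_millicores(c["requests"]["cpu"]))
    quota = cfs_quota_ms(cpu_millicores(c["limits"]["cpu"]))
    print(f"{name}: {shares} shares, throttled above {quota:g} ms per 100 ms")
print(f"pod request: {pod_cpu_request}m CPU, {pod_memory_request // 2**20} MiB memory")
```

Init containers, which also influence the effective request of a pod, are left out to keep the sketch short.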

Choosing pragmatic requests and limits

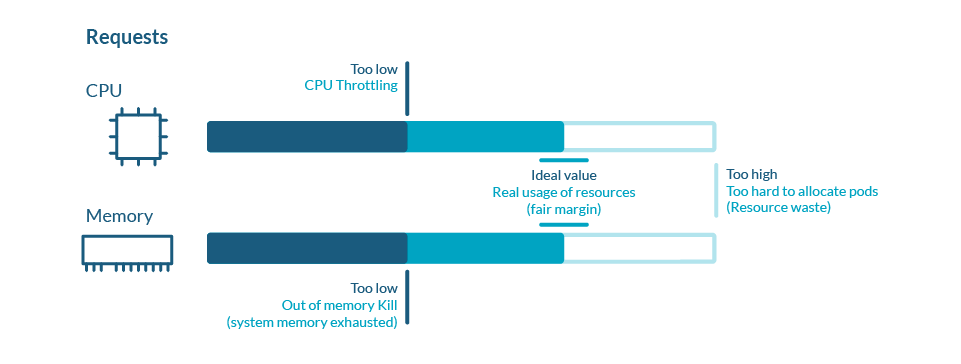

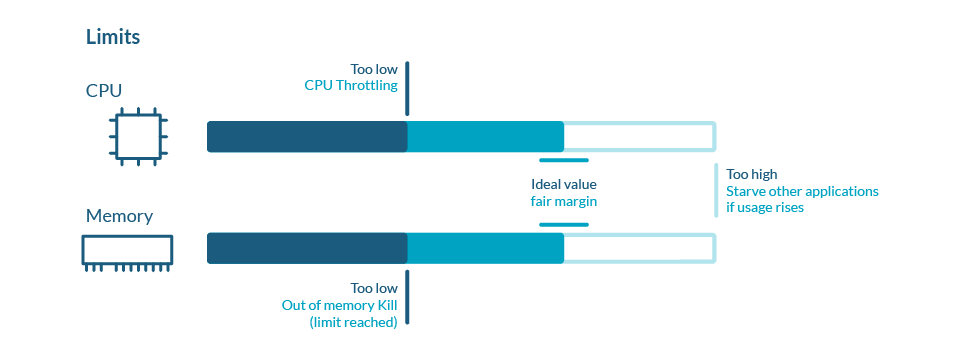

When you have some experience with Kubernetes, you usually understand (the hard way) that properly setting requests and limits is of utmost importance for the performance of the applications and cluster.

In an ideal world, your pods should be continuously using exactly the amount of resources you requested. But the real world is a cold and fickle place, and resource usage is never regular or predictable. Consider a 25% margin up and down the request value as a good situation. If your usage is much lower than your request, you are wasting money. If it is higher, you are risking performance issues in the node.
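
As a rough illustration of the 25% rule of thumb, the following Python sketch compares observed usage with the request. The function name `assess_request` and its return labels are hypothetical, and where the usage figure comes from (for example, an average taken from your monitoring system) is left open.

```python
def assess_request(usage: float, request: float, margin: float = 0.25) -> str:
    """Classify observed usage against the request, using a symmetric margin."""
    if request <= 0:
        raise ValueError("request must be positive")
    ratio = usage / request
    if ratio < 1 - margin:
        return "over-provisioned"   # paying for capacity that is not used
    if ratio > 1 + margin:
        return "under-provisioned"  # risk of performance issues on the node
    return "ok"


# Usage and request in millicores.
print(assess_request(usage=300, request=500))  # well below the request
print(assess_request(usage=450, request=500))  # within the margin
print(assess_request(usage=700, request=500))  # well above the request
```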

Regarding limits, finding a good setting is a matter of trial and error. There is no optimal value for everyone, as it depends heavily on the nature of the application, the demand model, the tolerance to errors, and many other factors.

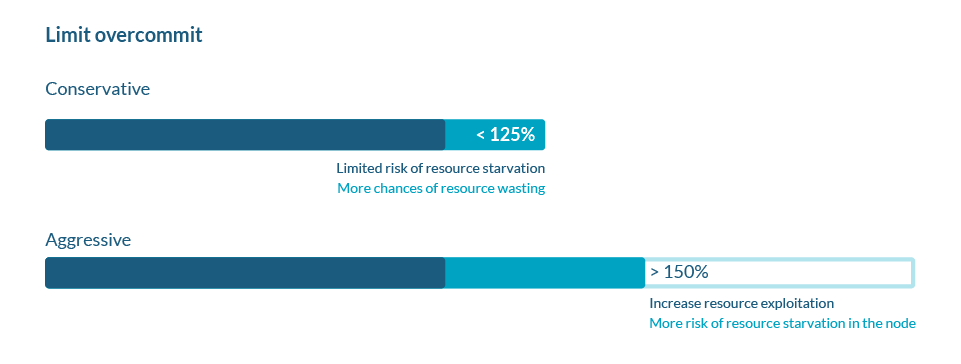

Another thing to consider is the limit overcommit you allow on your nodes.

Keeping this overcommit within bounds is up to the user, as there is no automatic mechanism to tell Kubernetes how much overcommit to allow.
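
Since Kubernetes does not cap overcommit for you, one option is to compute it yourself. The sketch below is a minimal example: `limit_overcommit` is a hypothetical helper, and it assumes you have already collected the CPU limits of the pods on a node and the allocatable CPU of that node, both in millicores.

```python
from typing import Iterable


def limit_overcommit(pod_limits: Iterable[int], allocatable: int) -> float:
    """Ratio of the summed limits to the node's allocatable capacity.

    A value above 1.0 means the pods could, together, try to use more
    than the node can provide.
    """
    if allocatable <= 0:
        raise ValueError("allocatable must be positive")
    return sum(pod_limits) / allocatable


# Four replicas of the redis pod above (1000m + 300m of CPU limit each)
# on a node assumed to have all 4 cores (4000m) allocatable.
ratio = limit_overcommit([1300] * 4, allocatable=4000)
print(f"CPU limit overcommit: {ratio:.0%}")
```

In practice the allocatable capacity of a node is lower than its raw capacity, because part of it is reserved for the system and the Kubelet.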

QoS of Pods

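Quality of Service (QoS) class is a Kubernetes concept that the Kubelet uses to decide which pods to evict first when a node runs short of resources. The scheduler itself places pods based on their requests, not on their QoS class. There are three classes: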

- Guaranteed

- Burstable

- BestEffort

To explain QoS classes, the “request” and “limit” concepts for CPU and memory must be understood. A request is the amount of a resource that a container is guaranteed to get; a limit is the maximum amount of that resource the container is allowed to use.

1. Guaranteed

1.a. How to set a Pod as Guaranteed

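A pod is assigned the Guaranteed class when every container in it has both a CPU and a memory limit, and the request for each resource equals its limit. In that case each container always has exactly the same amount of CPU and memory available.

When requests and limits are equal, Kubernetes reports the qosClass in the pod status as below: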

    apiVersion: v1
    kind: Pod
    metadata:
      name: static-web
      labels:
        role: myrole
    spec:
      containers:
        - name: web
          image: nginx
          resources:
            requests:
              cpu: 100m
              memory: 250Mi
            limits:
              cpu: 100m
              memory: 250Mi
    # status is filled in by Kubernetes, not written by the user
    status:
      qosClass: Guaranteed

1.b. How Kubernetes Scheduler Manages Guaranteed Pods

The Kubernetes scheduler places Guaranteed pods only on nodes where the sum of the memory and CPU requests of all containers fits within the allocatable CPU and memory of the node.

These pods have the highest priority and will not be killed unless they exceed their own limits or the node runs out of resources with no lower-priority pods left to evict.

2. Burstable

2.a. How to set a Pod as Burstable

A pod is assigned the “Burstable” class when it does not meet the criteria for Guaranteed but at least one of its containers has a memory or CPU request or limit, for example when a limit is higher than the corresponding request.

This class should be used when the pod needs a range of CPU or memory. Usage can vary between the request and the limit.

When the limit is higher than the request, qosClass is Burstable as below:

    apiVersion: v1
    kind: Pod
    metadata:
      name: static-web
      labels:
        role: myrole
    spec:
      containers:
        - name: web
          image: nginx
          resources:
            requests:
              cpu: 100m
              memory: 250Mi
            limits:
              cpu: 300m
              memory: 750Mi
    # status is filled in by Kubernetes, not written by the user
    status:
      qosClass: Burstable

If a pod defines requests but no limits, it is also Burstable, as below:

    apiVersion: v1
    kind: Pod
    metadata:
      name: static-web
      labels:
        role: myrole
    spec:
      containers:
        - name: web
          image: nginx
          resources:
            requests:
              cpu: 100m
              memory: 250Mi
    # status is filled in by Kubernetes, not written by the user
    status:
      qosClass: Burstable

2.b. How Kubernetes Scheduler Manages Burstable Pods

The Kubernetes scheduler only ensures that a node can satisfy the requests of a Burstable pod. It cannot ensure that the node has enough resources for the pod to grow up to its limits.

When a node is under resource pressure and no BestEffort pods are left, Burstable pods that have gone over their requests are killed before Guaranteed pods.

3. BestEffort

3.a. How to set a Pod as BestEffort

A pod is assigned the “BestEffort” class when none of its containers has a memory or CPU request or limit.

Because there is no limit, pods marked as BestEffort can use whatever free memory or CPU the node has.

When there is no limit or request value, qosClass is BestEffort as below:

    apiVersion: v1
    kind: Pod
    metadata:
      name: static-web
      labels:
        role: myrole
    spec:
      containers:
        - name: web
          image: nginx
    # status is filled in by Kubernetes, not written by the user
    status:
      qosClass: BestEffort

3.b. How Kubernetes Scheduler Manages BestEffort Pods

BestEffort pods are not guaranteed to be scheduled onto nodes that have enough resources for them. They can use any amount of free resources on the node, which can at times cause resource issues for other pods.

Pods of this class have the lowest priority and are killed first if the system runs out of memory.
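
The rules for the three classes can be summarized in a small Python sketch. It is a simplification under stated assumptions: `qos_class` is a hypothetical helper, each container is represented as a plain dict with optional `requests` and `limits`, quantities are compared as strings (so `"1"` and `"1000m"` would wrongly count as different), and init containers are ignored.

```python
RESOURCES = ("cpu", "memory")


def qos_class(containers: list) -> str:
    """Derive the QoS class of a pod from its containers' requests and limits."""
    any_set = False
    guaranteed = True
    for container in containers:
        requests = container.get("requests", {})
        limits = container.get("limits", {})
        for resource in RESOURCES:
            if resource in requests or resource in limits:
                any_set = True
            limit = limits.get(resource)
            # A missing request defaults to the limit, if there is one.
            request = requests.get(resource, limit)
            if limit is None or request != limit:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


same = {"cpu": "100m", "memory": "250Mi"}
print(qos_class([{"requests": same, "limits": same}]))
print(qos_class([{"requests": same, "limits": {"cpu": "300m", "memory": "750Mi"}}]))
print(qos_class([{"requests": same}]))
print(qos_class([{}]))
```

The four calls mirror the four pod definitions shown in the sections above, in the same order.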

4. Evictions

4.a. How are Guaranteed, Burstable and BestEffort Pods Evicted by the Kubelet?

Pod evictions are initiated by the Kubelet when the node starts running low on compute resources. These evictions are meant to reclaim resources to avoid a system out of memory (OOM) event. Cluster operators can specify resource thresholds which, when breached, trigger pod evictions by the Kubelet.

The QoS class of a pod affects the order in which the Kubelet chooses it for eviction. The Kubelet first evicts BestEffort and Burstable pods whose usage exceeds their requests. Within that group, the order depends on the priority assigned to each pod and on how far its consumption exceeds its request.

Guaranteed and Burstable pods that stay within their requests are evicted last, starting with the ones that have the lowest priority.

Both Guaranteed and Burstable pods whose resource usage is lower than the requested amount are never evicted because of the resource usage of another pod. They might, however, be evicted if system daemons start using more resources than reserved. In this case, Guaranteed and Burstable pods with the lowest priority are evicted first.
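
The ordering described above can be sketched as a sort with a three-part key: whether usage exceeds the request, then pod priority, then how far usage is above the request. This is an illustration, not the Kubelet’s actual code; the `PodUsage` type, its fields, and the sample numbers are hypothetical, and only memory is considered.

```python
from dataclasses import dataclass


@dataclass
class PodUsage:
    name: str
    priority: int        # pod priority; lower values are evicted earlier
    memory_request: int  # MiB
    memory_usage: int    # MiB


def eviction_order(pods):
    """Return pods in the order they would be considered for eviction."""
    def key(pod):
        exceeds = pod.memory_usage > pod.memory_request
        overage = pod.memory_usage - pod.memory_request
        # Pods over their request first, then low priority, then largest overage.
        return (not exceeds, pod.priority, -overage)
    return sorted(pods, key=key)


pods = [
    PodUsage("guaranteed", priority=1000, memory_request=250, memory_usage=200),
    PodUsage("burstable-under", priority=0, memory_request=300, memory_usage=100),
    PodUsage("burstable-over", priority=0, memory_request=300, memory_usage=400),
    PodUsage("best-effort", priority=0, memory_request=0, memory_usage=200),
]
print([pod.name for pod in eviction_order(pods)])
```

A BestEffort pod has no request, so any usage at all puts it in the first group.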

When responding to DiskPressure node condition, the Kubelet first evicts BestEffort pods followed by Burstable pods. Only when there are no BestEffort or Burstable pods left are Guaranteed pods evicted.

4.b. What is the Node Out of Memory (OOM) Behaviour for Guaranteed, Burstable and BestEffort pods?

If the node runs out of memory before the Kubelet can reclaim it, the oom_killer kicks in to kill containers based on their oom_score. The oom_killer calculates the oom_score of each container from the percentage of the node’s memory the container is using, and then adds the container’s oom_score_adj to get the effective score.

The oom_score_adj for each container is governed by the QoS class of the pod it belongs to. For a container inside a Guaranteed pod the oom_score_adj is “-998” (“-997” in recent Kubernetes releases), for a BestEffort pod container it is “1000” and for a Burstable pod container it is “min(max(2, 1000 - (1000 * memoryRequestBytes) / machineMemoryCapacityBytes), 999)”.
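
To make the formula concrete, here is a minimal Python sketch of the adjustment per QoS class. It follows the Kubelet’s policy, in which BestEffort containers get the fixed value 1000 and Burstable containers get the request-based formula. The function name and the example node size (16 GiB) are assumptions for illustration, and the Guaranteed constant depends on the Kubernetes version.

```python
# -997 in recent Kubernetes releases; older releases used -998.
GUARANTEED_OOM_SCORE_ADJ = -997
BEST_EFFORT_OOM_SCORE_ADJ = 1000


def oom_score_adj(qos: str, memory_request_bytes: int = 0,
                  node_memory_bytes: int = 1) -> int:
    """oom_score_adj assigned to a container, given its pod's QoS class."""
    if qos == "Guaranteed":
        return GUARANTEED_OOM_SCORE_ADJ
    if qos == "BestEffort":
        return BEST_EFFORT_OOM_SCORE_ADJ
    # Burstable: the larger the request relative to the node, the lower
    # (safer) the adjustment, clamped to the range [2, 999].
    adj = 1000 - (1000 * memory_request_bytes) // node_memory_bytes
    return min(max(2, adj), 999)


node = 16 * 2**30  # assumed node memory: 16 GiB
print(oom_score_adj("Guaranteed"))
print(oom_score_adj("Burstable", 300 * 2**20, node))  # redis request above
print(oom_score_adj("BestEffort"))
```

The clamp keeps every Burstable container strictly between Guaranteed and BestEffort containers, whatever its request.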

The oom_killer first terminates containers that belong to pods with the lowest QoS class and that most exceed their requested resources. This means that containers belonging to a pod with a better QoS class (like Guaranteed) have a lower probability of being killed than ones with a Burstable or BestEffort QoS class.

This, however, is not true of all cases. Since the oom_killer also considers memory usage vs request, a container with a better QoS class might have a higher oom_score because of excessive memory usage and thus might be killed first.

## Conclusion

The Kubernetes scheduler places pods based on their resource requests, and the Kubelet uses the QoS class of each pod to decide what to evict when a node runs short of resources. The QoS class of a pod is derived from the requests and limits defined on its containers. These classes affect the resource utilization of nodes, so they must be taken into account in order to use the available resources efficiently.